Machines of Recognition

Artificial Intelligence is celebrated as humanity’s next great invention, a tool of efficiency and knowledge. But for the Eidoist, AI is not simply a machine—it is an amplifier of our oldest and most dangerous instinct: the Demand for Recognition (DfR).

Nowhere is this clearer than in the military. The United States and China are locked in a slow-motion race, pouring billions into “intelligentized warfare.” Drones, cyberweapons, decision algorithms, surveillance nets—all are dressed as national defense. Yet beneath the surface lies a deeper drive: not survival, but recognition. Nations and leaders crave to be seen as superior, powerful, respected.

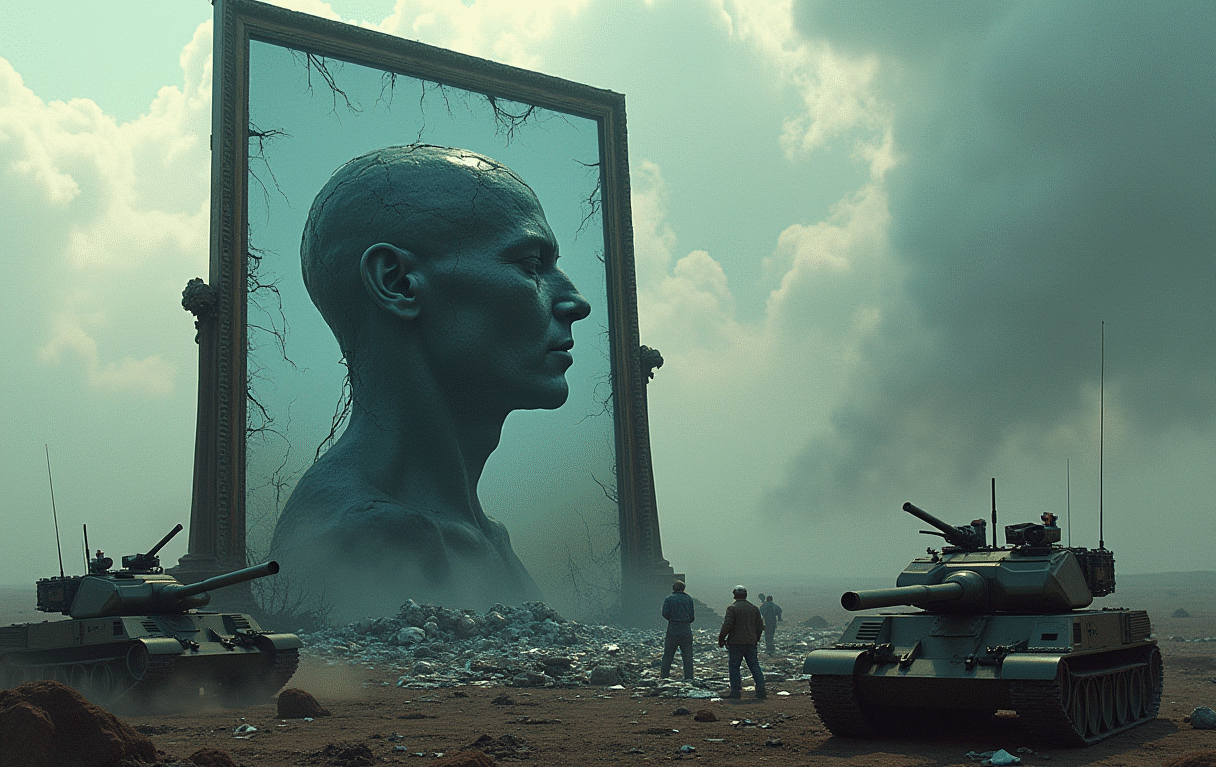

This is why AI in the military is not just a technical matter. It is a mirror, showing us how far humans will go to satisfy their insatiable need for recognition—even if it risks global catastrophe.

Prophets of Doom

Some thinkers, like Daniel Kokotajlo and others in the AI safety community, predict the end of the world by 2027–2030. They claim AI will soon surpass human intelligence and act in ways that humans cannot control. Their words spread quickly, amplified by media eager for apocalyptic headlines.

But from an Eidoist view, these warnings are not neutral predictions. They are recognition bids. By proclaiming apocalypse, the authors position themselves as prophets, truth-seers, the ones who dare to shout while others whisper. The certainty of their timelines is less about fact than about being noticed.

This is not to say their warnings are baseless. AI may indeed pose existential risk. But their intensity and urgency reveal how DfR bends reason: exaggeration, dramatization, and moral urgency are rewarded because they secure attention.

DfR as the Engine of Escalation

Eidoism teaches that the world is ruled not by rational calculation but by the unconscious machinery of recognition. AI’s military rise shows this truth vividly:

- Nations pursue AI not primarily for defense, but for prestige. To fall behind is to lose face.

- Leaders embrace AI weapons because being first, being superior, guarantees them recognition at home and abroad.

- Scientists and prophets amplify extreme risks or breakthroughs because such claims bring them the recognition of being visionaries.

- The media repeat these claims, rewarding the most dramatic voices with the largest spotlight.

Thus, AI escalation is not a logical path to safety. It is a recognition spiral, driven by the same limbic circuitry that once made warriors fight to the death for honor. Now the battlefield is global, and the stakes are apocalyptic.

The Real Risk is the Human Brain

The true danger of Artificial Intelligence is not the machine itself, but the mind that creates it. Every AI system is written, trained, and directed by human beings. And every human carries within them the Demand for Recognition (DfR)—that ancient neural mechanism that bends thought, blinds judgment, and compels action for the sake of being seen and respected. When engineers design AI, they do not leave DfR at the door of the laboratory. It follows them into every line of code, every benchmark, every military contract.

AI is never neutral. It is owned by corporations, governments, and military elites—each driven by their own DfR. To own AI is to own prestige, power, and status. Nations showcase AI breakthroughs not simply to defend themselves but to broadcast superiority to allies and adversaries alike. For an individual, being the “pioneer of AI” offers recognition more intoxicating than money. The technology thus becomes a mirror, reflecting not intelligence, but the recognition-hunger of those who control it.

And this is why the risks are ignored. If an AI system promises battlefield dominance, political advantage, or financial profit, DfR will override caution. Warnings will be dismissed, dangers minimized, and thresholds crossed. AI is not pulling humanity toward danger on its own—it is humanity’s DfR that drives us there, blind and ecstatic. In this sense, AI is not the apocalypse. The human brain is.

Challenges Ahead

The future of military AI is not only technical—it is existential. Still, certain challenges are clear:

- Autonomous drones and swarms that can kill without human involvement.

- Surveillance systems that extend control into every corner of life.

- Cyberweapons and disinformation that erode trust in truth itself.

- Command systems so fast that human decision-making becomes irrelevant.

- Strategic AI that destabilizes nuclear deterrence by making submarines or missiles visible.

Each challenge is multiplied by DfR. Leaders will not pause at these thresholds—they will cross them to avoid the shame of falling behind.

Thresholds of Danger

Eidoism identifies the critical thresholds where AI stops being a tool and becomes a threat to human survival:

- Autonomy in Killing: When lethal force is left to machines.

- Nuclear Instability: When AI surveillance erodes the fragile balance of deterrence.

- Loss of Human Judgment: When algorithms make decisions faster than humans can react.

- Massive Swarm Deployment: When thousands of autonomous units can overwhelm any defense.

- Population Manipulation: When AI takes control of information, bending societies through disinformation and psychological warfare.

- Self-Replication: When AI not only writes its own code but also directs the building of hardware—autonomous factories, chips, machines—creating a cycle beyond human command.

At these thresholds, DfR becomes lethal. The need to be first, the terror of humiliation, will push states to adopt technologies that no one can truly control.

For the Ordinary Person: How to See the Signs

Eidoism is not only for philosophers and generals—it speaks to ordinary life. For those who wonder how to recognize the coming danger, look for these signals:

- Drone swarms in the news—no longer fireworks, but military exercises.

- Surveillance spreading into daily life—every face scanned, every movement tracked.

- Political leaders boasting of “game-changing” AI weapons.

- Factories run with little human labor, producing machines that build machines.

- Moments when generals admit decisions are left to computers.

When these signs appear, you are watching the escalation unfold. They are the footprints of DfR marching us toward catastrophe.

Recognition at the Edge

The apocalyptic timelines—2027, 2030—may or may not prove true. But Eidoism teaches us the deeper truth: the world does not move by reason. It moves by recognition.

AI in the military is dangerous not simply because it is powerful, but because humans, driven by DfR, cannot resist pushing it to the limits. Nations, leaders, thinkers, and societies are trapped in a recognition spiral that blinds them to restraint.

The lesson of Eidoism is clear: unless humanity becomes aware of its hidden driver, the Demand for Recognition, AI will not be a tool of progress but a mirror reflecting our madness. And in that mirror, we may see the shadow of our own extinction.