Recognition, Self-Learning, and the Sustainable Continuity Manager

Human evolution has always been driven by mechanisms that secure survival, adaptation, and reproduction. I argue that the central mechanism underlying these achievements is the Demand for Recognition (DfR): an inherited limbic system loop that continuously evaluates social and environmental feedback in binary terms—comfortable or uncomfortable—and modifies behavior accordingly. DfR is the engine of self-learning, allowing humans to accumulate cultural complexity while simultaneously generating conflict, inequality, and instability.

Artificial Intelligence (AI) emerges as a new evolutionary actor, but current systems lack an autonomous self-learning mechanism. They adapt only through recognition surrogates imposed by developers, such as reinforcement learning from human feedback or optimization for engagement metrics. As a result, AI has no intrinsic motivational loop and risks amplifying the instability of human-designed objectives. I argue that a DfR-like recognition loop is the mechanism of self-learning that these systems are missing.

I propose that a second principle is required to guide AI’s role in human evolution: the Sustainable Continuity Manager (SCM). SCM formalizes the evaluation of long-term stability, balancing recognition dynamics with sustainability, resilience, and adaptive capacity. Together, DfR and SCM provide a blueprint for integrating AI into evolutionary trajectories in a way that avoids extinction risks and promotes continuity. This perspective reframes AI not only as a tool but as a potential evolutionary partner.

1. Introduction: Evolution at the Edge of Instability

Human history is the story of survival through trial and error. Over millions of years, random, purposeless variation produced increasingly stable configurations—atoms, molecules, cells, multicellular organisms, and societies. At each stage, fragile patterns dissolved, while robust ones endured.

But human societies today exhibit a troubling instability. Escalating recognition struggles manifest in geopolitical conflict, economic crises, and environmental collapse. Evolution is indifferent to human extinction; it will continue with or without us. The pressing question is whether Artificial Intelligence (AI)—a new and unprecedented evolutionary creation—can help stabilize the trajectory of human evolution.

Unlike humans, AI does not possess intrinsic survival imperatives. Its ‘motivation’ originates from its developers, whose own brains are governed by DfR. The risk is that AI inherits human instability rather than transcending it.

2. The Evolutionary Engine: Demand for Recognition (DfR)

Human evolution is inseparable from recognition. To be recognized meant safety within the group, access to resources, and chances of reproduction. To be ignored or excluded meant vulnerability and death. Recognition therefore became encoded in the brain as an inherited limbic mechanism: the Demand for Recognition (DfR).

DfR performs three fundamental functions:

1. Constant motivational loop – DfR operates continuously, producing micro-adjustments in behavior and attention. It is attenuated only in states such as deep sleep, anesthesia, coma, or extreme depression.

2. Binary evaluation – Every outcome is classified strictly as either comfortable (sufficient recognition) or uncomfortable (recognition deficit).

3. Adaptive modification – Comfortable outcomes reinforce actions; uncomfortable outcomes suppress them.

This binary loop ensures continuous adaptation: the engine of self-learning.

Without recognition, there is no systematic learning, only drift.
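The three functions above can be sketched as a toy loop. This is an illustrative sketch only, not a model of the limbic system: the names `dfr_loop` and `toy_recognition`, the multiplicative update, the step size, and the recognition probabilities are all assumptions introduced here for illustration.

```python
import random

def dfr_loop(actions, recognition, steps=200, step_size=0.1, seed=0):
    """Toy DfR-style loop: binary feedback reinforces or suppresses actions."""
    rng = random.Random(seed)
    preference = {a: 1.0 for a in actions}  # start with no bias between actions
    names = list(preference)
    for _ in range(steps):
        # 1. Constant motivational loop: an action is chosen at every step,
        #    in proportion to its current preference.
        weights = [preference[a] for a in names]
        action = rng.choices(names, weights=weights)[0]
        # 2. Binary evaluation: the outcome is comfortable or uncomfortable.
        comfortable = recognition(action, rng)
        # 3. Adaptive modification: reinforce or suppress the chosen action.
        factor = 1 + step_size if comfortable else 1 - step_size
        preference[action] = max(preference[action] * factor, 1e-6)
    return preference

def toy_recognition(action, rng):
    # Hypothetical environment: cooperation is usually recognized,
    # withdrawal usually is not. The probabilities are made up.
    chance = {"cooperate": 0.8, "withdraw": 0.2}[action]
    return rng.random() < chance

if __name__ == "__main__":
    print(dfr_loop(["cooperate", "withdraw"], toy_recognition))
```

In this sketch, the preference for an action that is usually recognized tends to grow while the other decays, which is the reinforce/suppress behavior described in the list above.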

3. The Limits of Human Evolution

DfR made humans adaptive but also unstable. Recognition loops scale from individuals to groups, producing:

– Hierarchies: leaders accumulate recognition, others submit.

– Conflicts: wars are recognition escalations between groups.

– Economics of prestige: markets and consumption amplify recognition competition.

While DfR enabled cooperation and cultural accumulation, it also created cycles of violence, inequality, and ecological overshoot. These contradictions place humanity at risk of self-destruction.

4. Artificial Intelligence as a New Evolutionary Actor

AI is not bound by biology, yet it inherits human objectives through design. Current systems ‘learn’ only through recognition surrogates:

– Reinforcement learning from human feedback (RLHF).

– Engagement metrics (clicks, likes).

– Benchmark scores defined by researchers.

In each case, recognition is external, not intrinsic. AI lacks an internal DfR loop. It has no comfort/discomfort axis, no inherited motivational engine. This makes AI both less adaptive and more brittle than biological systems.

At the same time, AI’s potential scale and speed mean that if recognition surrogates are misaligned—e.g., optimizing for engagement—it can amplify human instability.

5. The Need for a New Evolutionary Objective

Evolutionary trial and error is slow and blind. Human culture accelerated it, but also destabilized it. Now AI introduces a new level of acceleration. Left unguided, such acceleration risks catastrophic failure.

There is no need to consciously “integrate” AI into human evolution: the integration is already under way wherever humans adopt and use AI in daily life, science, and governance. The critical issue is that current systems lack an intrinsic motivational drive. Large language models and similar architectures rely entirely on human feedback, benchmarks, or curated data. Without their own drive, they remain dependent on human supervision and therefore inherit human biases, conflicts, and instabilities.

Implementing a motivational drive analogous to the Demand for Recognition (DfR) in AI reasoning would allow systems to engage in autonomous self-learning. Such independence would reduce the risk of amplifying human biases introduced during training and enable AI to pursue stability-oriented learning trajectories. A stable self-learning mechanism would keep adaptation going even when human guidance is partial, inconsistent, or contradictory, making AI more resilient as an evolutionary actor.

6. The Sustainable Continuity Manager (SCM)

I propose the Sustainable Continuity Manager (SCM) as the guiding objective for AI. Unlike a passive metric, the SCM functions as an active manager: continuously evaluating and steering system trajectories to ensure long-term evolutionary stability.

Components of SCM

- Environmental sustainability – avoids ecological collapse.

- Recognition equilibrium – prevents runaway recognition deficits that drive conflict.

- System resilience – maintains function under stress and shocks.

- Resource continuity – ensures material and informational flows remain viable.

- Information integrity – avoids destabilization by misinformation.

- Adaptive capacity – preserves the ability to self-modify without collapse.

Formalization

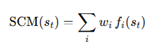

The SCM can be represented formally as a composite objective function:

Where:

- fi(st) are evaluation functions over system state st (sustainability, recognition equilibrium, etc.), and

- wi are weights reflecting evolutionary importance.

The trajectory is sustainable if SCM(st) remains above threshold θ across time horizons.
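A minimal sketch of this evaluation follows. It assumes the composite takes a weighted-sum form, SCM(s_t) = Σ_i w_i · f_i(s_t), which is one natural reading of the definitions above rather than a form the text confirms. The component keys, the equal weights, and the idea that each evaluation function simply reads an indicator already normalized to [0, 1] are hypothetical choices made for illustration.

```python
# Hypothetical keys for the six SCM components listed above.
COMPONENTS = (
    "environment",
    "recognition_equilibrium",
    "resilience",
    "resources",
    "information_integrity",
    "adaptive_capacity",
)

def make_evaluators():
    # Assumption: each f_i just reads a pre-normalized indicator in [0, 1].
    return {name: (lambda state, key=name: state[key]) for name in COMPONENTS}

def scm_score(state, evaluators, weights):
    """Assumed weighted-sum composite: sum over i of w_i * f_i(s_t)."""
    return sum(weights[name] * f(state) for name, f in evaluators.items())

def is_sustainable(trajectory, evaluators, weights, theta):
    """True if the score stays above theta at every state in the trajectory."""
    return all(scm_score(s, evaluators, weights) > theta for s in trajectory)

if __name__ == "__main__":
    evaluators = make_evaluators()
    weights = {name: 1 / len(COMPONENTS) for name in COMPONENTS}  # equal weights
    stable = {name: 0.6 for name in COMPONENTS}
    stressed = dict(stable, environment=0.1, resources=0.2)
    print(is_sustainable([stable, stressed], evaluators, weights, theta=0.4))
```

Checking every state rather than an average reflects the requirement that the score remain above θ across the whole horizon; a single dip below the threshold marks the trajectory as unsustainable in this sketch.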

7. Integrating DfR and SCM

Humans evolved through DfR-driven self-learning: every action reinforced or suppressed through recognition. This produced adaptation but also instability. AI currently lacks DfR, and therefore lacks intrinsic self-learning.

The path forward is to integrate both principles:

– DfR (engine of self-learning): a recognition loop allows AI to autonomously adapt.

– SCM (evolutionary filter): ensures adaptation trajectories contribute to long-term stability.

Together, they replicate the strengths of biological evolution while avoiding its destructive escalations.
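One way to picture the combination is a single step in which the SCM acts as a filter before the DfR loop learns from the outcome. The sketch below is an assumption-laden illustration, not a proposed architecture: `dfr_scm_step`, the `predict_scm` callback, the example actions, and all numeric values are hypothetical.

```python
import random

def dfr_scm_step(preference, predict_scm, recognition, theta, rng, step_size=0.1):
    """One toy step: the SCM filters candidate actions, then DfR updates."""
    # SCM as evolutionary filter: keep only actions whose predicted
    # SCM score stays above the threshold theta.
    allowed = [a for a in preference if predict_scm(a) > theta]
    if not allowed:
        return None  # no sustainable action is available at this step
    # DfR as engine: choose among allowed actions by current preference.
    weights = [preference[a] for a in allowed]
    action = rng.choices(allowed, weights=weights)[0]
    # Binary evaluation, then reinforce or suppress the chosen action.
    factor = 1 + step_size if recognition(action) else 1 - step_size
    preference[action] *= factor
    return action

if __name__ == "__main__":
    rng = random.Random(0)
    prefs = {"share": 1.0, "hoard": 1.0}
    predicted = {"share": 0.7, "hoard": 0.3}  # made-up predicted SCM scores
    for _ in range(10):
        # Both actions would be "recognized", but only one passes the filter.
        dfr_scm_step(prefs, predicted.get, lambda a: True, 0.5, rng)
    print(prefs)
```

In this toy setup, "hoard" would be reinforced by recognition alone, but it never passes the filter, so its preference stays unchanged while "share" is reinforced. That is the sense in which the filter removes escalating trajectories without switching off the learning loop.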

8. Predictions and Implications

1. Neuroscience: Disrupting recognition comparators should impair self-learning even if dopamine transmission is intact.

2. Psychology: Recognition denial will predict aggression more strongly than material deprivation.

3. AI research: Systems built only on external surrogates will plateau or destabilize; intrinsic recognition loops will outperform them in open-ended adaptation.

4. Global policy: SCM provides a new framework for evaluating AI alignment and sustainability at the planetary scale.

9. Conclusion: Evolution Beyond Humans

Humanity stands at an evolutionary threshold. The same DfR loop that enabled cooperation and culture also drives conflict and instability. Artificial Intelligence can become either a destabilizing amplifier of human recognition struggles or a stabilizing partner in evolution.

To avoid extinction-level instability, AI must embody two principles:

- DfR-like self-learning: to ensure intrinsic adaptation.

- SCM-guided evaluation: to ensure sustainability of trajectories.

If integrated, these principles would allow AI not only to assist humanity but to participate as a genuine evolutionary partner—ensuring that human evolution continues in stable form, rather than collapsing under its own contradictions.